Table of Contents

Today the majority of businesses and organisations are running some or all of their IT operations in the public cloud. Recent surveys show that over 90% of businesses are doing this today, meaning the public cloud is an essential part of their IT infrastructure and how they deliver technology and services to their users and customers.

However, for organisations that need to run HPC applications, the choice between continuing to run workloads on premise or shifting them to the cloud can present a number of questions and challenges that need to be addressed before making the leap from running HPC locally in your own data centre to running in the public cloud.

In this article we take a closer look at the benefits of running HPC workloads in the cloud as well as the challenges you may face, in order to help you make educated decisions as to whether HPC in the cloud is right for your specific workloads and business.

Traditional Approach to HPC

Traditionally, HPC has been run as an on-premise resource, using either large SMP machines or clusters of servers running Linux in order to provide the computational performance required to run demanding scale out workloads. This is because a typical HPC workload places significant demands on the compute hardware and infrastructure that supports it, meaning many organisations choose to keep HPC running in their local data center.

It may be that your application requires a low latency network, access to high performance storage or other HPC technology that would otherwise restrict the performance of your applications. There may be other factors that limit your ability to run workloads in the cloud such as data volumes, software licensing restrictions or other challenges which have limited the appeal of migrating your HPC to the cloud.

However with a number of major cloud providers offering specific HPC services and capabilities, complex HPC systems can now be built and deployed successfully in the public cloud. By leveraging the different compute instance types available, offer fast CPUs, high memory per core ratio, accelerators and GPUs, and access to high bandwidth low latency network interconnects and storage, organizations can take full advantage of the public cloud for HPC workloads.

Benefits of HPC in the Cloud

Running High Performance Computing workloads in the cloud brings a number of benefits for organizations that have changing needs and demands coming from their HPC User community and allows to benefit from the following advantages:

Bursting HPC Workload into the Cloud

To maximize return on investment of your on-premise HPC system, you want your system to be over-subscribed, meaning the cluster is running at maximum utilization with a small backlog of workloads. Whilst this delivers ROI for the capital investment of the HPC system, it can often mean the on-premises HPC capacity is too limited to run all the HPC jobs that are required at the time.

This means important jobs have to wait for access to resources, simulations have to be scaled back, or projects cannot proceed fast enough leading to costly delays. In such cases, supplementing your HPC capacity with on-demand cloud resources enables you to complete projects faster, run larger and more complex simulations and handle urgent demands for access to HPC resources to meet critical deadlines.

Bursting to the cloud allows you to be more agile by being able to react to dynamic business requirements, that place varying demands on your HPC resources.

Capacity and Capability to run HPC Workloads

It often happens that capacity to run larger workloads is not available. Jobs requiring a higher number of cores either exceeding on-prem resources or facing an always busy on-prem HPC cluster have issues to find a slot. Adding HPC resources in the cloud on-demand overcomes such kind of restrictions and to be able to match HPC job requirements which often happen more seldom but are important as well.

Adapt the Hardware Resources to the individual HPC Job

Running an on-premises HPC cluster often results in a compromise between the average hardware configuration suitable for a mix of different applications or a specific configuration that is designed to run a particular application or workload very well. This can result in poor application performance due to the compromises being made to accommodate all of the applications being used.

In the cloud, the hardware can be configured to perfectly suit the application requirements, allowing for the perfect match between hardware and workload. If your codes require a very large amount of memory, or would benefit from accelerators not found in your on premise system, you can configure a cloud HPC environment specifically to meet the needs of the workload.

Many different compute hardware/instance types are available, with some offering up to 128 physical cores and connected with a high speed low latency inter-connect. Machines with large amounts of memory per core, fast local NVMe disks, FPGAs and GPUs provide a wide range of options to optimize the job runtime based on the most appropriate hardware.

Testing and benchmarking new Hardware

Access to recent hardware (CPUs, GPUs, inter-connects, …) for testing and running benchmarks is easily available in the cloud. Multi-GPU servers e.g. with high-end GPUs cost 100+k USD while being available on-demand in the cloud at a couple of 10s of USD. ISV and other codes can be tested on recent GPUs as well as inter-connects on demand to understand performance benefits. New processor architectures can also be tested like e.g. ARM-based processors which often offer attractive price-performance.

Save Money and Time on expensive ISV Software Licenses

ou can use the cloud to run expensive ISV software codes on often significantly faster hardware than on-prem. Typically commercial ISV software codes are expensive so it makes perfect sense to run those licenses on the fastest available hardware.

Local hardware often has a life cycle of 3 years so running the latest expensive ISV code on aged hardware can cost significant money due to longer run times. In the cloud you can find instances with an all-core-turbo frequency of up to 4.5GHz. It is important to free up expensive licenses as fast as possible for the next job.

Archive Result Data

Downloading the large output data after post-processing typically in the cloud takes time and usually causes cost via egress fees so leveraging cheap cold storage backup solutions in the cloud offers attractive prices and avoids any downloads of result data. Cloud providers offer different types of cold storage with high durability depending e.g. on access speed and frequency.

Parallel File System as a Service

In case your HPC cluster needs a high-throughput parallel file system cloud vendors or service providers offer parallel file systems as a service. AWS FSx for Lustre or Weka.io e.g. support sub-millisecond latencies, up to hundreds of gigabytes per second of throughput, and millions of IOPS supporting POSIX interfaces after a few clicks. Multiple deployment options and storage types including linking to object storage optimize cost and performance for your workload. These services free you up from maintaining the parallel file system yourself.

Drawbacks of HPC in the Cloud

Cost of HPC in the Cloud higher than On-Premises

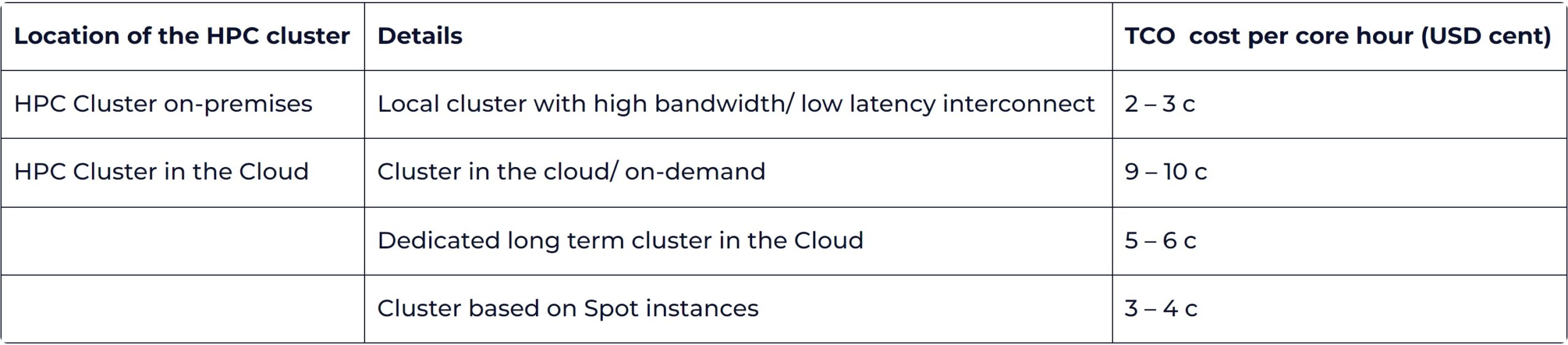

If you have a well-designed local cluster, the costs of acquisition and running it are lower than in the Cloud. The costs of running an HPC Cluster in the Cloud can vary significantly depending on the type of cloud instances you use to create your HPC Cluster.

If you just need an HPC cluster for a short time, you use on-demand instances to create the cluster. When you have finished your job, you delete those instances. The costs of such a cluster can be as much as 5 times higher than that for a local cluster. If you have a long term contract for say one year with a cloud provider, the costs can be much lower, but it is still more expensive than a local cluster. If you want the cheapest price for a Cloud instance, you go to the spot market. You may have to wait for Cloud instances that meet your requirements and the resources might be taken away any time but it might happen infrequently based on the utilization of the cloud instances. The costs of spot instances are about 2/3 lower than on-demand instances and closer to local cluster costs.

Overview of coreHour costs (cost per core per hour) for HPC clusters on-prem and in the cloud

Performance in the Cloud

The performance of an HPC cluster in the Cloud highly depends on the configuration. In the cloud often virtual machines are used with hyper-threading turned on (vCores) and the network infrastructure might be different. Depending on the overhead of the virtual machine infrastructure and in case there is no specialized hardware leveraged for I/O, … it might be of interest to reserve e.g. 2 cores of the cluster for the hypervisor. This will reduce the compute power available.

An HPC cluster typically needs a fast interconnect. If no Infiniband infrastructure is available the latency will typically be higher, so larger models with a higher number of cores might not scale as good as with the lowest latency available. In the cloud Infiniband infrastructures are available or substitutes based on standard TCP with an optimized network stacks and additional hardware (e.g. EFA in AWS).

Data Gravity keeps Data in the Cloud

Data, especially the amounts of data that are used in HPC, cannot be moved around easily. It takes time, even on fast connections, to download 50 or 100 GByte for post-processing, and this is typically only for one HPC job. The best solution is often to keep the data in the cloud and post-process on cloud instances with or without GPUs leveraging high-end remote 2D/3D access via NICE DCV. The data size in the cloud increases making it more difficult later to move data back to your local infrastructure. Moving data from the cloud back to on-premises also comes at a cost: the data egress cost.

Data Egress Costfor downloading Data from the Cloud

Another issue that makes it more difficult to move data out of the cloud on a local infrastructure is the costs you have to pay to get data out (egress) of the cloud. You do not have to pay to get data into the cloud, but the costs to get the typically larger output data back can be high. This also adds to the Data Gravity: once the data is in the cloud it becomes more difficult to get it out. It is typically faster and cheaper to do post-processing in the cloud.

Example of egress cost and transfer times:

- Typical egress cost to download 100 Gbyte: ca. 7 USD

- Download time of 100 Gbyte via company internet connection: ca. 28h via 10Mbit/sec, ca. 5.5h via 50Mbit/sec, ca. 3h via 100Mbit/sec

- Post-processing on GPU instance (T4) for 40 hours (working week, on-demand pricing): ca. 35 USD (includes ca. 6 USD egress cost)

ISV Licenses – Terms and Network Access

Many companies run ISV codes on their HPC clusters. Often the license costs for these codes exceed the costs of the hardware they run on. Therefore, it can be advantageous to run them in the cloud where the machines may be faster than your local servers. However, integration of a cluster in the cloud with your infrastructure in such a way that you can use your ISV licences in an optimal way is a challenge and not always possible.

Some ISVs do not allow you to run codes on machines that are more than 20-30 miles/kilometres away from your main location. Other ISVs have different legal restrictions in place. There are also ISVs that have floating licenses, managed by a license manager. This type of licenses can also be used in your Cloud infrastructure, provided that they always have a connection to the license manager. This requires a proper network set-up.

Sometimes you can also split your license pool in two parts, each with its own lisence manager: one sub-pool for use in the cloud and another for your local cluster. This way you may lose some flexibility. Before moving data and workloads to the Cloud, you have to carefully examine both legal and technical issues related to licenses for your ISV codes.

Secure Your Networking into the Cloud

Having some of your clusters in a cloud, and others on premise in your own data center poses some security challenges. A standard technique used is VPN. Setting up a VPN from your cluster in the cloud into your local data center is a way to secure communications. This way the cluster in the cloud from a network point of view nicely extends into the Cloud. Using ssh to login to the cluster is generally considered to be too insecure.

However, the complete seamless integration of a cluster in the cloud into your local company infrastructure is quite complicated and involves corporate networking and policies.

Split HPC Clusters – On-Premises and in the Cloud

If we combine all benefits and drawbacks, the typical advise is to not try to integrate cloud nodes seamlessly into the local on-prem cluster. The most efficient way is to set up a separate cluster in the cloud due to job and data transfer complexities, licenses integration, dynamic allocation of cloud resources into the HPC cluster, inhomogeneous access strategies for users.

Hybrid HPC – Best of Both Worlds

There are Pros and Cons of using HPC in the Cloud. Often it is not attractive to move all HPC workloads to the Cloud to leverage advantages due do e.g. existing hardware which needs to be written off, disadvantages described above and to first make steps learning about HPC in the Cloud for the most attractive and suitable workloads.

A typical feasible approach is “Hybrid HPC”: move part of the HPC workload to the Cloud most suitable for HPC resources in the cloud and keep part of it on-premises in the own data center. In the previous sections we took a closer look at Data, Data Gravity, Egress Costs, networking, and ISV licenses.

All these aspects need to be considered when deciding the optimal strategy for which workloads to move to the Cloud, and what to keep in your local on-premises HPC Cluster. In the next sections we highlight a couple of topics relevant to Hybrid HPC.

Extend the On-Premises HPC Cluster or create new HPC Cluster?

It looks attractive to extend the existing on-prem HPC cluster into the Cloud but there are several issues related to this approach.

The first problem is related to dynamic enlarging and shrinking of the HPC cluster in the Cloud. The scheduler used in the HPC Cloud environment typically needs to be able to cope with dynamic re-sizing of the cluster to take advantage of the cloud bursting benefits. Extending the on-premises cluster with a dynamic cluster extension can create several issues to the on-prem HPC cluster.

The second problem is related to HPC jobs and data integration. Often the on-premises workflows depend on high-speed file servers to provide the input data on the fly. This is not an option for the HPC jobs running in the Cloud due to the much lower network bandwidth. Mounting file shares for large amounts of data is too slow.

Because of these issues typically a separate HPC cluster is setup in the Cloud.

Seamless Integration of HPC Workflows

In your local data center, you most likely have a shared file system that can be used by all HPC jobs. When a job needs input data it simply gets it from that file system. Results are written back to the file system. This works because the network inside your data center is fast.

The network bandwidth connecting to an HPC Cluster in the Cloud is much slower and cost to synchronize data can be high. Extending your shared file system into the Cloud is in general not possible for most applications, because the performance decreases dramatically.

Often the following two-step approach for HPC workloads makes sense: The major amount of data needed in the Cloud is cached typically in the form of include files which can be updated in case they change. These are typically re-used by several different jobs as part of the larger workload of individual or groups of users. Required include files can be automatically staged to the HPC cluster in the Cloud as they are required by the respective jobs: they are transferred once, and made available semi-permanent (cached) to the HPC cluster in the Cloud.

Each individual HPC job typically has an input file with parameters specific to the job. Many different HPC jobs may use the same include files, but the input file is specific to a job. As input files are usually in the range of kBytes they can be sent along with the job and can be uploaded for each individual HPC job to cope with the bandwidth restrictions.

To align with the existing HPC processes the preparation of a job is typically done on the on-premises systems such as the user’s workstation:

- Pre-Processing and Preparation of the HPC job

- Submit the job into the scheduler including 2 options

- The scheduler can be tuned to automatically decide where the job will be routed to – the on-prem HPC cluster or the HPC cluster in the Cloud, or the

- user decides if the job shall be run on-prem or in the Cloud when the job is submitted.

- In case the job is routed to the Cloud upload input files and other accompanying files. Automatically identify include files that need to be available and

- use cached include files if already available in the Cloud, or

- upload include files into the cache

- When needed, grow the HPC cluster to provide the necessary HPC capacity to run the job

As explained before, whether you download the results directly to your local on-premises environment and do the post-processing there, or do post-processing in the cloud, depends on the size of the output files and the optimal post-processing workflow.

User Authentication

Users of the HPC systems, whether the systems are in the own data center or in the Cloud, should be able to access them with the same credentials to authenticate. This can be achieved by creating an authentication environment in the Cloud, for instance by an LDAP server and Active Directory (AD) server and federate that with your main authentication service in your local data center. The services in the Cloud are configured to receive and synchronize user credentials from the main, on-premises authentication services.

We look forward to discuss with you about your plans for Hybrid HPC.

HPC as a Service

Several HPC as a Service like Rescale, Nimbix, Simscale, … offer cloud resources readily configured for HPC purposes including HPC applications and where needed or applicable their respective ISV license as a bundle.

The HPCaaS pricing typically includes the cloud resources as well as the hourly cost of ISV licenses which can be selected in an easy to use web interface. As HPCaaS bundles all services together and the providers need to earn money as well the overall cost is often higher than building the solution yourself – again there is the advantage of bursting on-demand jobs, capability of running HPC jobs which cannot be run on-premises, leveraging high core frequencies and others highlighted above.

Often the input data will be uploaded via web interfaces and afterwards the output data downloaded via the web GUI as well in case the data needs to be preserved. Post-processing is typically part of the HPCaaS offering via visualization services supporting efficient and high-end remote 2D/3D desktops supported by NICE DCV.

Resources related to HPC in the Cloud

AWS

- HPC on AWS

- AWS HPC Workshops

- HPC Tech Shorts Videos

- ParallelCluster – Build HPC Environments on AWS; AWS ParallelCluster on Github

- HPC Connector – HPC Cloud Bursting from On-Prem; Tech Short Video: EnginFrame with AWS HPC Connector

- FSX for Lustre – Parallel File System as a Service

- Overview of AWS instance types

- Overview of AWS resources and prices: https://instaguide.io/ (not maintained anymore)

- Simple Monthly Calculator and AWS Pricing Calculator

Let’s discuss Your HPC Strategy

Our team at NI SP is happy to schedule a call contributing more than 20 years of HPC experience to our discussion. If you have any questions, comments or want to dive deeper on and discuss your HPC strategy on-premises and in the cloud please let us know!